How to Measure Your Social Media Success

Apr 24, 2024

Social media has become one of the most powerful tools a business can use for connecting with audiences, building brands,…

James Du Pavey wanted to be seen as one of the leading estate agents in the Midlands by modernising their website with a rebrand. We quickly understood their brief and used our expertise to deliver success. Find out more about the process and results in our case study.

Printdesigns’ key objective was to ensure web traffic levels were retained following the launch of their new website. By combining the resources of our digital marketing and studio teams, we gave Printdesigns a seamless transition. Discover how we achieved our goal and how the new website performed.

We offer leading UX design and tailored digital marketing solutions to both B2B & B2C businesses. Delivering high-quality results isn’t just a promise, it’s in our DNA. Our scrupulously assembled team are equipped with years of experience, expertise, and a passion for delivering exceptional results.

Let’s identify your goal…

WEB DESIGN – Meet the demands of the modern user with expert UX website design.

SEO – Our SEO solutions are one of a kind and set the trend for the rest.

PPC – Our PPC marketing uses expert keyword analysis to drive more targeted traffic toward client websites.

SOCIAL MEDIA – Our aim with social media marketing is to give our clients exposure, character, and fantastic results.

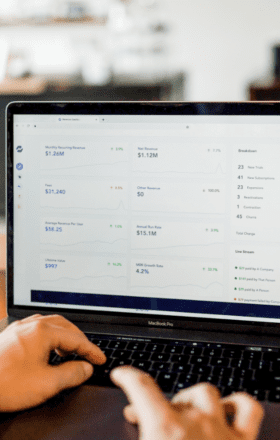

The journey we go on with our clients is something we take great pride in. We can do more than just build a beautiful new website. We’ll take a data-driven approach and make informed design decisions.

With our SEO and PPC support, we’ll conduct in-depth research on popular keywords and optimise your on-site content and ads to increase traffic. To improve your brand awareness and community engagement across multiple channels, we offer transformative social media management.

Wherever you need support, our highly skilled team of digital specialists will provide recommendations and innovative solutions to amplify your business online.

Loading time is a huge contributor to the overall experience that users will have on your website, as well as…

By Carla Crichton, SEO Account Manager at West Midlands digital marketing agency, Extramile Digital. We’ve noticed an influx of spam…